Is the AI bubble bursting?

Sabine Hossenfelder and Gartner think so

The preceding image is the Gartner “Hype Cycle” graph for Generative AI. Gartner claims that many fads and related phenomena follow the indicated trend: Innovation Trigger, Peak of Inflated Expectations, Trough of Disillusionment, Slope of Enlightenment, Plateau of Productivity. Gartner and Hossenfelder both argue that we have passed the peak of inflated expectations for Generative AI and are heading toward the trough of disillusionment.

Both also expect the deflation to be relatively mild compared to other burst bubbles. That’s the case, they argue, because AI is for real. It’s an important technology, but uninformed enthusiasm, specifically about Generative AI (Large Language Models) on its own, has blossomed before widespread business value has been established.

The most successful use of generative AI so far has been in programming assistants. As Hossenfelder says (lightly edited):

Even coding, the best current use case for AI, isn't living up to expectations. Multiple studies have found that while AI produces a lot of code quickly, it also creates mistakes and security problems that need to be fixed down the line.

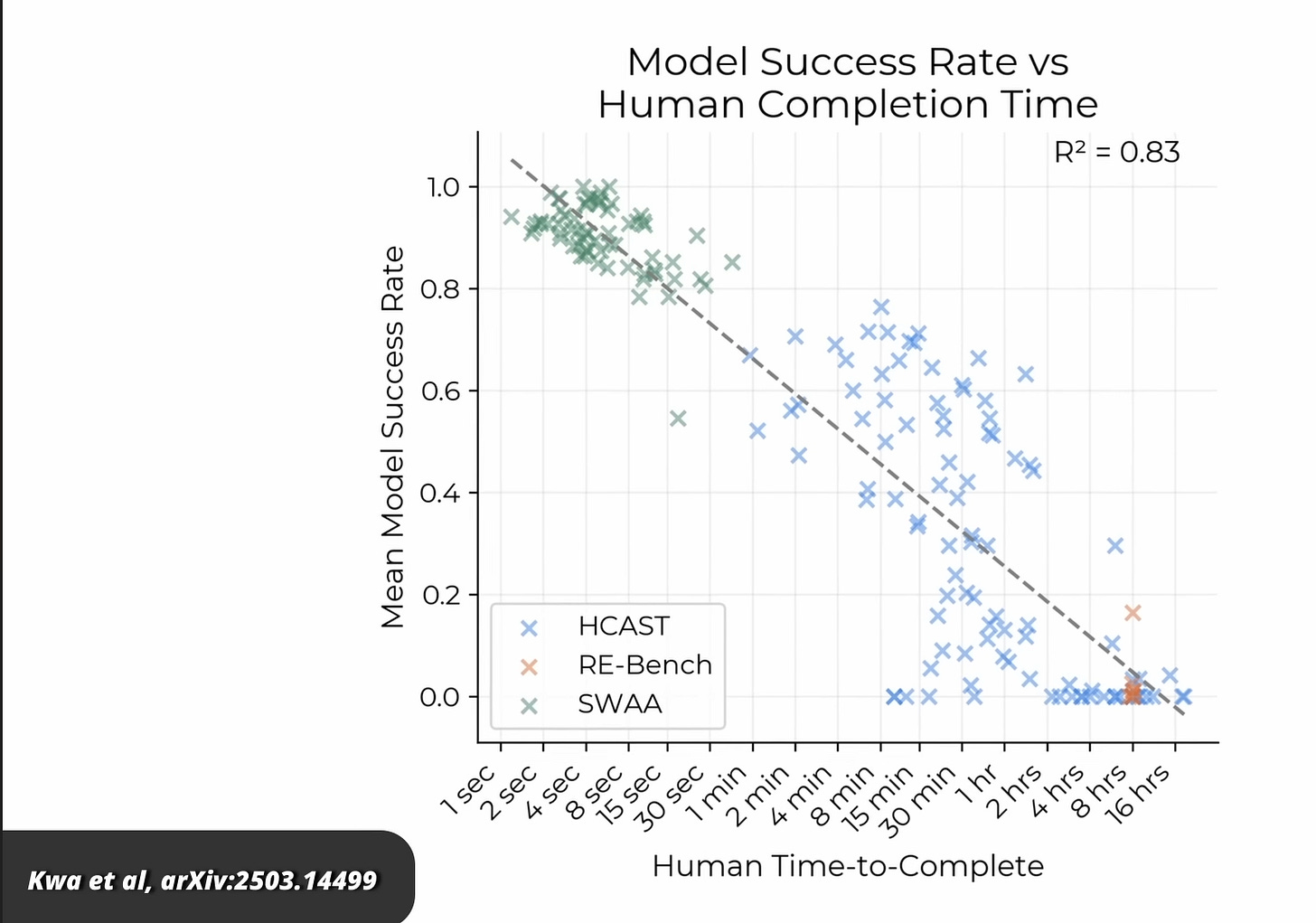

The newest study on the matter comes from METR, the Model Evaluation and Threat Research group, which found that large language models suck at completing long tasks and actually make developers slower.

If you need evidence that AI coding isn't panning out, some software engineers are now specializing in vibe-coding cleanup!

My limited experiments with coding assistants confirm this perspective.

![](https://substackcdn.com/image/fetch/$s_!YQmL!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fb02142a6-d4c9-4d70-a08d-f3560bef1d96_985x586.jpeg)